IPD Investigation - Library Database Contention (iOS)

Introduction¶

This document aims to shed light on the client-side journey behind user-perceived IPD (UP-IPD) and the possible contributors to higher latency. Although it primarily addresses iOS, some of the insights may also apply to Android because the two Playback architectures are similar. We have set a goal to improve IPD by 30% this year, and we recently investigated an issue where our leadership reported high IPD latency. The team has explored several directions, including Weblab treatments and bitrate quality. Here are some other useful documents related to this effort:

Executive Summary¶

The team is still investigating the latency regressions reported by leadership, and several factors likely contributed to the latencies observed during those events. We have, however, identified one significant cause of latency on iOS: contention for the Library Database. More broadly, several components can introduce latency across the client-side IPD journey, and they are tightly correlated with networking and I/O conditions. The client-side playback flow involves local and Amplify DB operations, network API calls including MuSe and CloudQueue, and the local playback engine (Harley), which in turn makes other API calls such as DMLS. For more details see User-Perceived IPD Overview. Initiating playback from Recently Played poses additional latency challenges, described in Latency Challenges from Recently Playback Flow.

Background¶

Both the Android and iOS apps require tracks to be saved in the internal database before they can be played. This architecture was established before Amazon Music evolved into a streaming service, when it was primarily an MP3 store with a library concept. On iOS specifically, a single WriteQueue is associated with every Library Database operation. Sync calls may update the Database with new information, which can cause contention with the playback flow, since playback also requires Database access to ensure that tracks are written to and/or read from the DB. Sync calls occur periodically (every 10m on iOS, 30m on Android), in response to user actions, and during cold starts. Anecdotally, most of the extreme cases of IPD latency we have experienced have been during cold starts or when resuming the app from the background. The Next Steps section outlines our plan to address this issue going forward.

User-Perceived IPD Overview¶

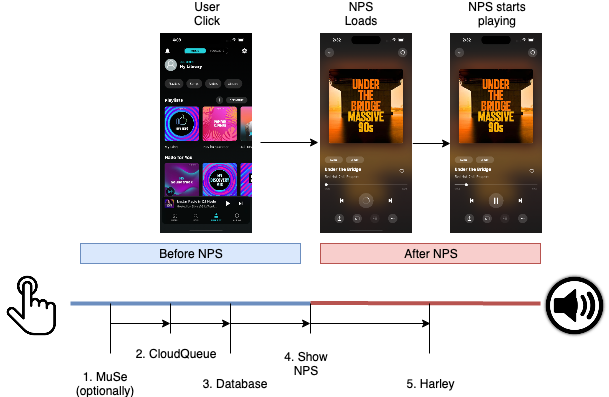

The user-perceived IPD (UP-IPD) is the duration between a user’s click and the rendering of audio. In our visual apps, this also includes the time it takes to display the NowPlaying screen (NPS) or Stage before the audio is rendered. Given the current architecture, it is useful to divide the UP-IPD into two phases, before and after the NPS/Stage is displayed, since different operations occur during each phase. If most of the time is spent before the NPS is displayed, the cause is likely CloudQueue, MuSe, or DB involvement; if it is spent after the NPS is displayed, the cause is likely Harley’s involvement, such as bitrate quality. Note that in some cases we do not display the NowPlaying screen and playback is controlled only by the mini-transport. Here we focus on the cases where we do show the NPS, as they cover the majority of flows and provide more visual clues about what’s happening under the hood:

1. MuSe: Depending on the use case, the MuSe page API may be called to fetch track metadata (for example getArtistDetailsMetadata). API calls to MuSe can be a source of latency if the client is under poor networking conditions. The following CR captures the total request/response time, which will help us determine when this is the case: https://code.amazon.com/reviews/CR-90877419/revisions/1#/details

2. Cloud Queue: For online interactions (like Stations), when we don’t already have the content locally we call initiateQueue; if we have the data locally, we write it to the Amplify datastore.

3. Database: In the current architecture, tracks need to be inserted into the Database. After most DB operations, we also kick off operations to ensure the integrity of the DB, removing orphaned album/artist entities when necessary.

4. Show NPS: At this point we kick off showing the NowPlaying screen (or Stage).

5. Harley: Receives the track metadata from the client and attempts to start audio playback. Bitrate quality, calls to DMLS, etc. have an effect only from this point onwards. Note that Harley’s IPD currently reports only the time from when it is invoked by the client until playback renders, meaning the current IPD metric does not account for steps #1, #2 and #3.

Currently, if any of operations #1, #2, or #3 takes a long time, the customer waits without any visual clue that things are processing. A simple CX improvement is to decouple showing the NPS/Stage from these calls. This way, the loading and rendering animation of the NPS/Stage would mask some of the latency and provide a mechanism to show that things are loading (a spinner). This is planned to be addressed in MUSICMOBILE-15873.
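To make the two-phase split concrete, here is a minimal sketch of how the phases could be derived from client-side timestamps. The `PlaybackTimestamps` type and its field names are hypothetical and do not correspond to existing metrics in the app; the point is only that Harley’s IPD covers a subset of the UP-IPD.

```python
from dataclasses import dataclass


@dataclass
class PlaybackTimestamps:
    """Hypothetical client-side timestamps (seconds) for one playback attempt."""
    user_tap: float        # user taps a playable item
    nps_shown: float       # NowPlaying screen (or Stage) is displayed
    harley_invoked: float  # client hands the track metadata to Harley
    audio_rendered: float  # first audio is rendered


def up_ipd_breakdown(ts: PlaybackTimestamps) -> dict:
    """Split the user-perceived IPD into the phases described above."""
    return {
        # Steps #1-#3 (MuSe, Cloud Queue, Database) happen before the NPS shows.
        "pre_nps": ts.nps_shown - ts.user_tap,
        # Harley's work (bitrate quality, DMLS, ...) happens after the NPS shows.
        "post_nps": ts.audio_rendered - ts.nps_shown,
        # What Harley's IPD reports today: invocation until playback renders.
        "harley_ipd": ts.audio_rendered - ts.harley_invoked,
        # What the user actually perceives: tap until audio.
        "up_ipd": ts.audio_rendered - ts.user_tap,
    }
```

For example, with illustrative timestamps of 0.0, 2.0, 2.5 and 3.5 seconds, the UP-IPD is 3.5s while Harley’s IPD is only 1.0s; the 2.0s spent before the NPS is shown is invisible to the Harley metric.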

Playback Sequence Diagrams¶

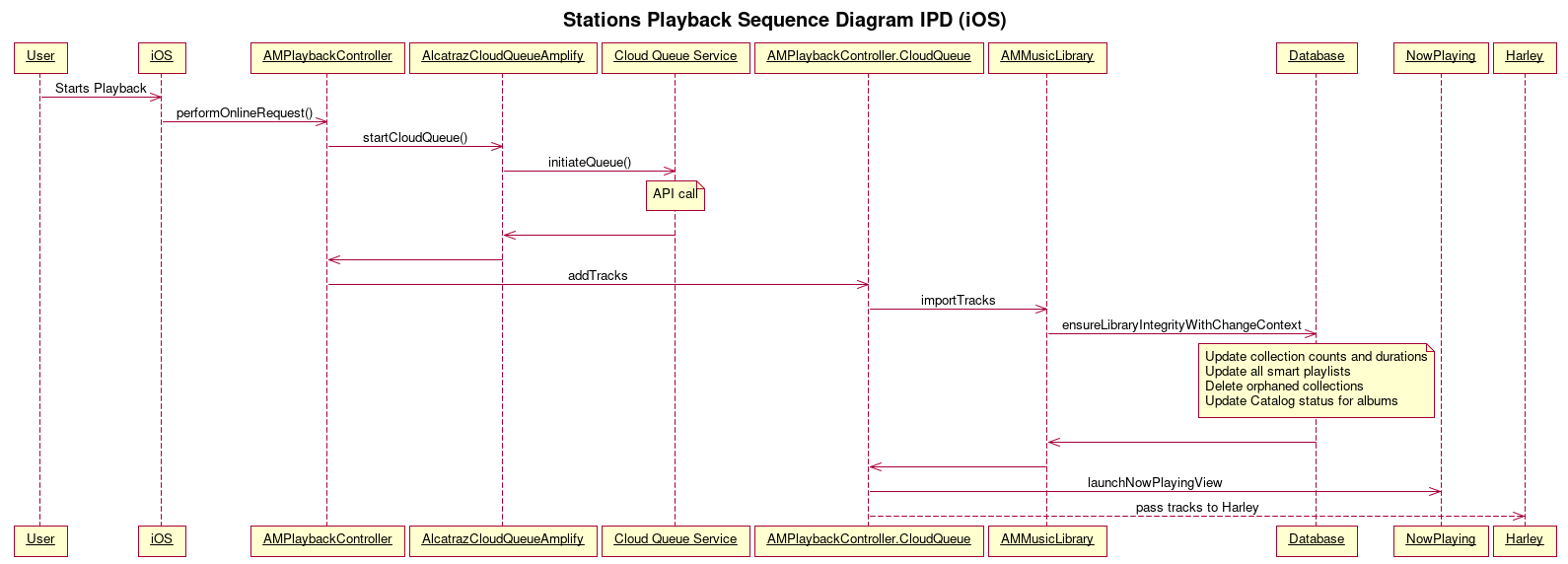

Recently Played Stations Playback flow¶

This is a high-level summary of the playback sequence for Stations on iOS, from the user click until NowPlaying is invoked and before the playback request is handed off to Harley.

As the naming suggests, the call to ensureLibraryIntegrityWithChangeContext aims to ensure the library database is up to date with any ASIN changes, updating collection counts and durations, deleting orphaned collections and updating catalog status. This method is invoked each time playback is invoked as well as during the sync operation. The logs recorded by QA when they were able to reproduce a 14s playback delay suggest this operation was running over and over during a sync operation:

2023-05-02 23:29:18.486 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library

...

2023-05-02 23:29:18.930 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library

...

2023-05-02 23:29:19.347 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library
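As a rough aid for reading such logs, the sketch below extracts the timestamps of the repeated integrity message and reports the gap between consecutive occurrences. It assumes the log line format shown in the excerpt above; the function name and the marker string matching are illustrative, not part of the app.

```python
import re
from datetime import datetime

# The three log lines from the excerpt above (elided lines omitted).
LOG_LINES = [
    "2023-05-02 23:29:18.486 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library",
    "2023-05-02 23:29:18.930 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library",
    "2023-05-02 23:29:19.347 [<NSThread: 0x2830efcc0>{number = 16, name = (null)}] <AMMusicLibraryCloud: 0x281741710> will delete orphaned albums from the device library",
]

TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})")
MARKER = "will delete orphaned albums"


def integrity_run_gaps_ms(lines):
    """Return the gaps (in ms) between consecutive integrity-check log lines."""
    stamps = []
    for line in lines:
        match = TIMESTAMP.match(line)
        if match and MARKER in line:
            stamps.append(datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S.%f"))
    return [
        round((later - earlier).total_seconds() * 1000)
        for earlier, later in zip(stamps, stamps[1:])
    ]
```

For the excerpt above, `integrity_run_gaps_ms(LOG_LINES)` returns `[444, 417]`, i.e. the integrity pass was starting again roughly every 0.4s on the same thread.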

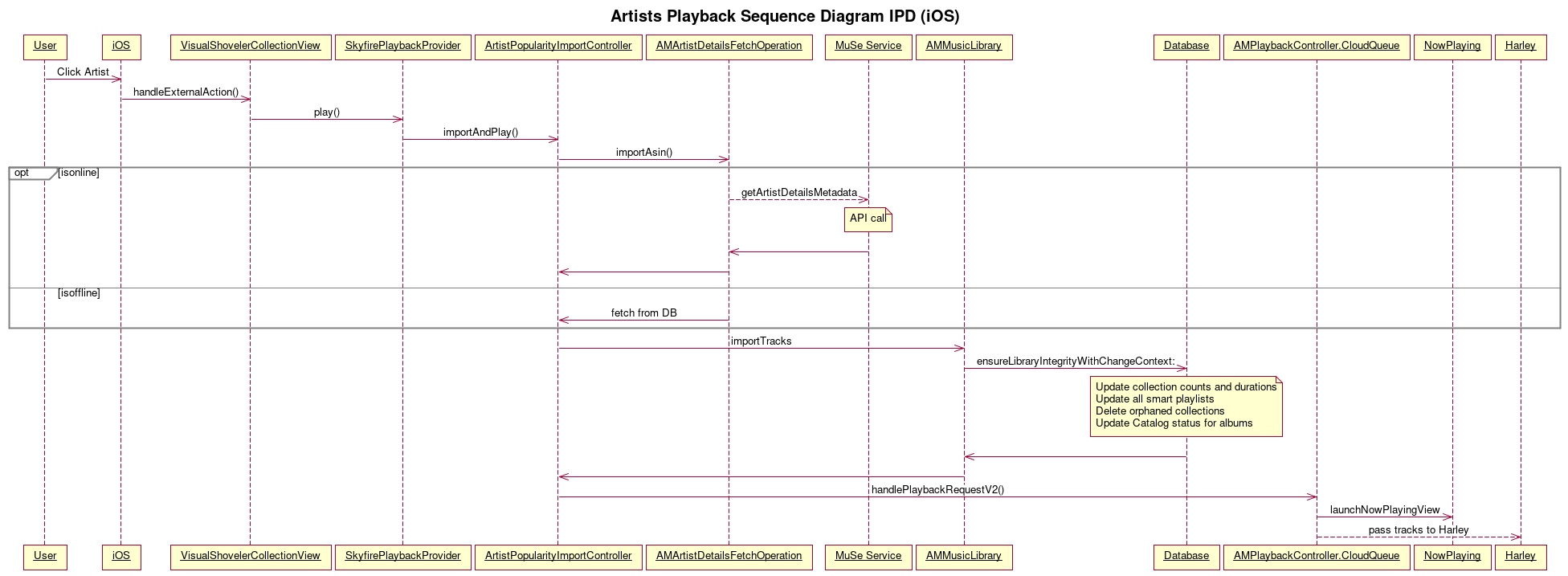

Recently Played Artists Playback flow¶

This sequence diagram represents the high-level flow for Artist playback on iOS when started from the Recently Played / Library page.

Note that, in addition to the Database contention problem, this flow also calls MuSe to fetch getArtistDetailsMetadata, which could introduce additional latency. We should explore improving our caching strategy so that we do not always rely on MuSe if we already have the content cached locally (within certain expiration thresholds).
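One way to picture the caching idea is a small cache with an expiration threshold: a fresh local entry is returned directly, and the network is used only on a miss or when the entry has expired. This is a sketch under assumptions; the `ExpiringCache` class and the `fetch` callback are hypothetical and stand in for whatever actually calls MuSe.

```python
import time


class ExpiringCache:
    """Cache values for a fixed time-to-live before fetching them again."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so tests can control time
        self._entries = {}       # key -> (stored_at, value)

    def get_or_fetch(self, key, fetch):
        """Return a fresh cached value, or call fetch(key) and cache the result."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]      # still within the expiration threshold
        value = fetch(key)       # e.g. the network call for artist metadata
        self._entries[key] = (now, value)
        return value
```

The choice of expiration threshold is a product decision: a longer threshold removes more network calls from the playback path at the cost of potentially stale metadata.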

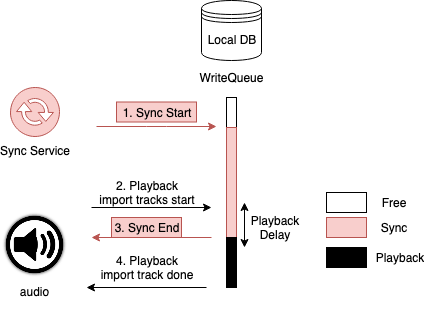

Database Contention: Cirrus/Playlist Sync & Playback¶

A single WriteQueue is utilized on iOS for executing operations on the local database. During the playback process, tracks must be written into the database. However, if a Sync operation is currently underway, the playback process will be delayed until the Sync operation has been completed and the WriteQueue is available. The following diagram aims to provide a summary of this issue.

The Sync Service operates at regular intervals (every 10m on iOS, 30m on Android) and/or in response to specific user actions, such as when the Library is modified (e.g. deleting an album, editing a playlist). The Sync Service interacts with the database.

When the user initiates playback, as illustrated in the sequence diagram above, the process ultimately involves database operations. However, the process is blocked until the Sync Service has completed its database operations.

Once the Sync Service has finished its database operations, playback operations can resume.

At this point, playback database operations are carried out and playback can begin.

In summary, this is intended to demonstrate that Sync operations and the Playback flow are currently closely intertwined, which can lead to contention and result in increased UP-IPD latency.
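The effect can be modeled with a few lines of code. The sketch below simulates a single serial FIFO queue, which is how the WriteQueue is described above; the function name, job names and durations are illustrative assumptions, not measurements.

```python
def completion_times(jobs):
    """Simulate one serial FIFO queue.

    jobs: list of (name, arrival_s, duration_s), ordered by arrival time.
    Returns a dict mapping each job name to the time at which it finishes.
    """
    clock = 0.0
    finished = {}
    for name, arrival, duration in jobs:
        start = max(clock, arrival)  # a job cannot start before the queue is free
        clock = start + duration
        finished[name] = clock
    return finished


def playback_delay(sync_duration_s, playback_arrival_s, playback_duration_s):
    """Extra time a playback write spends waiting behind an in-flight sync."""
    finished = completion_times([
        ("sync", 0.0, sync_duration_s),
        ("playback", playback_arrival_s, playback_duration_s),
    ])
    uncontended_finish = playback_arrival_s + playback_duration_s
    return finished["playback"] - uncontended_finish
```

With an illustrative 10s sync and a 0.5s playback write arriving 1s into the sync, `playback_delay(10.0, 1.0, 0.5)` is `9.0`: the user waits for the remainder of the sync even though the playback write itself is short.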

Latency Challenges from Recently Playback Flow¶

It’s important to note that both Steve’s and Ed’s scenarios of higher latency were reported when starting playback from Recently Played. Counter-intuitively, the Recently Played flow may have higher latency than other flows, such as starting playback from Detail Pages (Albums/Playlists). This is because we need to perform the following operations serially:

1. Perform a service call to fetch track metadata from MuSe (except for Stations).

2. Import the track metadata into the Database.

3. Interact with Cloud Queue / Amplify.

4. Feed the tracks to Harley.

In contrast, starting playback from Detail Pages splits these operations into two steps: (a) opening the detail page executes #1 and #2, and (b) the user starting playback executes #3 and #4. Since there is “user think time” between (a) and (b), the database contention concern is also reduced.

Next Steps¶

Short term¶

Reducing Playback/SyncService DB contention: Make the ensureLibraryIntegrityWithChangeContext method asynchronous so that playback does not wait for those integrity operations to finish. An initial attempt to fix this issue was implemented by: https://code.amazon.com/reviews/CR-90861585/revisions/2#/diff

Batching of Sync Database writes: Right now, a large playlist sync holds the WriteQueue until it finishes, and a playback request that arrives in the meantime has to wait. The idea is to break the Sync Database writes into smaller chunks so that a playback request has the chance to go through without waiting for the entire sync to finish. Addressed by https://code.amazon.com/reviews/CR-91215901/revisions/2#/details

Subtask: Improve client-side Playlist sync checkpoint logic: improvements can be made around the Playlist Sync logic where we may not be using checkpoints correctly. See https://code.amazon.com/packages/IndigoAlcatraz/blobs/43fe1760f39203fae58eb044227d1e7877d09de7/–/CloudPlayer/CloudPlayer/Classes/Sync/Playlists/AMPlaylistsSyncOperation.m#L316. Tracked by https://jira.music.amazon.dev/browse/YFC-1860
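The intuition behind batching Sync Database writes can be sketched as a small simulation. It assumes a serial queue in which a waiting playback write is allowed to run at the next chunk boundary; that scheduling policy, the function name and the row costs are assumptions for illustration, not the behavior of the referenced CR.

```python
def playback_wait_ms(sync_rows, row_cost_ms, chunk_rows, playback_arrival_ms):
    """How long a playback write waits while a sync writes rows in chunks.

    The queue is serial: a chunk that has started always runs to completion,
    and a pending playback write is served at the next chunk boundary.
    """
    clock = 0
    remaining = sync_rows
    while remaining > 0:
        if playback_arrival_ms <= clock:
            # We are at a chunk boundary and playback is waiting: let it run.
            return clock - playback_arrival_ms
        rows = min(chunk_rows, remaining)
        clock += rows * row_cost_ms
        remaining -= rows
    # Playback arrived during the last chunk, or after the sync finished.
    return max(0, clock - playback_arrival_ms)
```

With an illustrative sync of 1000 rows at 10ms per row and a playback request arriving at 1100ms, a single 1000-row write makes playback wait 8900ms, while 50-row chunks reduce the wait to 400ms. Smaller chunks bound the worst-case wait by the duration of one chunk, at the cost of more transactions.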

Revisit NPS eager launch: Currently we are waiting for CloudQueue and Database operations to be completed before showing NPS. This results in a poor CX where nothing is showing on the screen for at least 1-2s. It can easily be improved by eagerly starting the NPS screen. Tracked by https://issues.amazon.com/MUSICMOBILE-15873
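The ordering change can be sketched as follows, with the NPS shown in a loading state before the slower preparation work is awaited. All three callbacks are hypothetical placeholders for the real UI, CloudQueue/Database and Harley integration points.

```python
import asyncio


async def start_playback(show_nps, prepare_tracks, hand_off_to_player):
    """Show the NPS eagerly, then wait for the slower preparation steps."""
    show_nps(loading=True)           # immediate visual feedback (spinner)
    tracks = await prepare_tracks()  # CloudQueue / Database work happens here
    hand_off_to_player(tracks)       # playback engine takes over
    return tracks


def demo():
    """Run the flow with fake callbacks and return the observed event order."""
    events = []

    async def fake_prepare():
        await asyncio.sleep(0)       # stands in for network and DB latency
        events.append("tracks ready")
        return ["track-1"]

    asyncio.run(start_playback(
        lambda loading: events.append("NPS shown"),
        fake_prepare,
        lambda tracks: events.append("handed off"),
    ))
    return events
```

Calling `demo()` yields `["NPS shown", "tracks ready", "handed off"]`: the screen appears first, so the preparation latency is spent behind a visible loading state rather than a frozen UI.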

Show tap highlight feedback: Currently most of the UX widgets on iOS do not provide “highlight” feedback upon touch. As Steve called out, this makes it look like the tap was not registered, as there’s no reaction to it. This change may be applied at the Bauhaus UX widget level, tracked by https://issues.amazon.com/issues/MUSICMOBILE-16059

Record client-side network roundtrips: Record the time from when the client sends a request until the response is received. This will help narrow down cases where the service-side response is fast but intermittent or poor network conditions on the client make the round trip slow. Addressed by: https://code.amazon.com/reviews/CR-90877419/revisions/1#/details
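A minimal sketch of this kind of measurement is a wrapper that records elapsed time around a request, including when the request fails. The `record_round_trip` helper and the `sink` list are hypothetical; the referenced CR may record timings differently.

```python
import time
from contextlib import contextmanager


@contextmanager
def record_round_trip(name, sink, clock=time.monotonic):
    """Append (name, elapsed_seconds) to sink once the wrapped block exits."""
    start = clock()
    try:
        yield
    finally:
        # Recorded on failures too, so timeouts under poor network are visible.
        sink.append((name, clock() - start))
```

Usage would look like `with record_round_trip("getArtistDetailsMetadata", timings): ...` around the request. Comparing this client-side value with the service-side latency for the same request separates slow services from slow networks.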

Revisit Sync Service triggers and options: in addition to the periodic sync intervals (10m on iOS, 30m on Android) we are triggering full sync for many operations (such as deleting a single track) which may not be necessary. AMSyncProtocol already provides flags that can be used to only trigger a subset of the sync operations. This would make app network and database resource usage more frugal.
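The idea of triggering only a subset of the sync can be illustrated with bit flags. The `SyncScope` values and the action names below are hypothetical and are not the actual flags exposed by AMSyncProtocol; they only show how a trigger could map to the narrowest scope it needs.

```python
from enum import Flag, auto


class SyncScope(Flag):
    """Hypothetical sync scopes; the real AMSyncProtocol flags may differ."""
    LIBRARY = auto()
    PLAYLISTS = auto()
    FULL = LIBRARY | PLAYLISTS


# Assumed mapping from trigger to the narrowest scope that covers it.
SCOPE_BY_TRIGGER = {
    "delete_track": SyncScope.LIBRARY,
    "edit_playlist": SyncScope.PLAYLISTS,
    "periodic": SyncScope.FULL,
}


def scope_for(trigger):
    """Return the sync scope for a trigger, defaulting to a full sync."""
    return SCOPE_BY_TRIGGER.get(trigger, SyncScope.FULL)


def should_sync_playlists(trigger):
    """True if the trigger's scope includes the playlist sync."""
    return SyncScope.PLAYLISTS in scope_for(trigger)
```

Under this mapping, deleting a single track would no longer start a playlist sync, which is the kind of saving on network and WriteQueue time described above. Unknown triggers fall back to a full sync so that nothing is silently skipped.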

Piecemeal metrics improvement: Introduce an e2e latency metric for IPD and incrementally add finer-grained, per-step timings to help root-cause latency.

Ongoing as part of Perf work

Logging improvements: Introduce additional logs throughout core playback flows so that we can easily navigate logs and determine user interaction flow and time taken between playback components. Addressed by https://code.amazon.com/reviews/CR-91092146 and https://code.amazon.com/reviews/CR-91110504

Long term¶

Decoupling Persistency Requirements: We should consider evolving the underlying architecture so that music tracks no longer need to be persisted in the Database before being played. Moving away from the concept of “Library” will help us achieve this. Currently this would be a major refactoring, as much of the playback stack is based on this legacy approach. It could be a good candidate for the cross-platform initiative (React Native/DragonFly) rather than a retrofit of the existing Native stack.

Revisiting service-side and client-side Playlist Sync approach: Incremental Sync seems to not have been enabled in years, requiring clients to do full sync. More thought should be put into this around the RN/DragonFly persistency approach.

Removing MuSe from the critical playback flow: Certain flows, such as Artist Detail playback, currently involve calling MuSe to fetch artist detail metadata. We should explore whether this call can be removed by a combination of (1) smarter caching and (2) ensuring track metadata is provided by the upstream service (Nimbly in this case).

Improving behavior under intermittent network conditions: We need to make our apps better at working under intermittent networking conditions. Feature quality should be verified by QA not only on high-bandwidth networks but also under poorer conditions.

FAQs¶

FAQ-1: Is the Amplify Datastore also using the same WriteQueue as the library database? No, Amplify has its own access to its datastore and is not exposed to clients.